Implementation Science: Evaluation Stage: Difference between revisions

| Line 11: | Line 11: | ||

</blockquote>The backbone of any implementation evaluation is having a clear evaluation purpose, and direct relevant and answerable evaluation questions that are aligned with the evaluation approaches and methods. Evaluations are typically associated with judging the effectiveness of the implementation process but can also inform decisions about the implementation process and outcomes of the implementation process. | </blockquote>The backbone of any implementation evaluation is having a clear evaluation purpose, and direct relevant and answerable evaluation questions that are aligned with the evaluation approaches and methods. Evaluations are typically associated with judging the effectiveness of the implementation process but can also inform decisions about the implementation process and outcomes of the implementation process. | ||

== Implementation Evaluation | == Implementation Evaluation and Contextual Challenges == | ||

“What will affect what you implement?” To refresh your memory, please see [https://www.physio-pedia.com/Implementation_Science:_Pre-Implementation_Stage#Understanding_Context this article] to review the multiple factors that may affect the successful implementation of evidence-based rehabilitation interventions. | “What will affect what you implement?” To refresh your memory, please see [https://www.physio-pedia.com/Implementation_Science:_Pre-Implementation_Stage#Understanding_Context this article] to review the multiple factors that may affect the successful implementation of evidence-based rehabilitation interventions. | ||

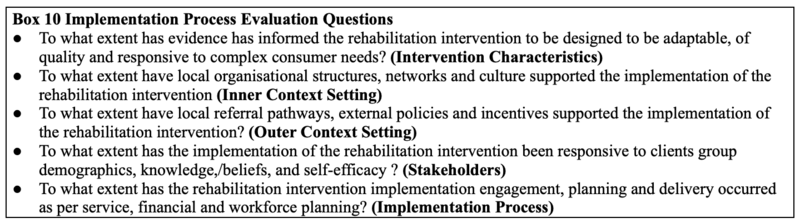

It is easy to become overwhelmed given the increasing quantity of information surrounding these multiple contextual challenges to implementing rehabilitation interventions. It is therefore beneficial to use a more comprehensive approach to evaluating which contextual factors have influence on implementation | It is easy to become overwhelmed given the increasing quantity of information surrounding these multiple contextual challenges to implementing rehabilitation interventions. It is therefore beneficial to use a more comprehensive approach to evaluating which contextual factors have influence on a particular implementation's outcome. '''Box 10''' provides several key implementation process evaluation questions. | ||

[[File:Implementation science box 10.png|center|thumb|800x800px|These implementation process evaluation questions are based upon several existing implementation science evaluation frameworks listed below.]] | [[File:Implementation science box 10.png|center|thumb|800x800px|These implementation process evaluation questions are based upon several existing implementation science evaluation frameworks listed below.]] | ||

'''Examples of implementation science evaluation frameworks that can identify barriers and facilitators to key implementation outcomes:''' | '''Examples of implementation science evaluation frameworks that can identify barriers and facilitators to key implementation outcomes:''' | ||

| Line 29: | Line 29: | ||

* The [https://sites.bu.edu/ciis/files/2016/06/PRECEDEPROCEED-Model-Cheat-Sheet_CGA.pdf Precede-Proceed Model of Health Program Planning & Evaluation] <ref>Allen CG, Barbero C, Shantharam S, Moeti R. [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6395551/ Is theory guiding our work? A scoping review on the use of implementation theories, frameworks, and models to bring community health workers into health care settings.] Journal of public health management and practice: JPHMP. 2019 Nov;25(6):571.</ref> | * The [https://sites.bu.edu/ciis/files/2016/06/PRECEDEPROCEED-Model-Cheat-Sheet_CGA.pdf Precede-Proceed Model of Health Program Planning & Evaluation] <ref>Allen CG, Barbero C, Shantharam S, Moeti R. [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6395551/ Is theory guiding our work? A scoping review on the use of implementation theories, frameworks, and models to bring community health workers into health care settings.] Journal of public health management and practice: JPHMP. 2019 Nov;25(6):571.</ref> | ||

== | == Implementation Evaluation and Implementation Strategies == | ||

“What will help what you implement?” To refresh your memory, please see [[Implementation Science: Implementation Stage#Implementation Strategy Classification|this article]] to review the multiple implementation strategies which support the successful implementation of evidence-based rehabilitation interventions. <blockquote>The five classes of implementation strategies: | |||

# Implementation process strategies | |||

# Dissemination strategies | |||

# Integration strategies | |||

# Capacity building strategies | |||

# Scale up strategies | |||

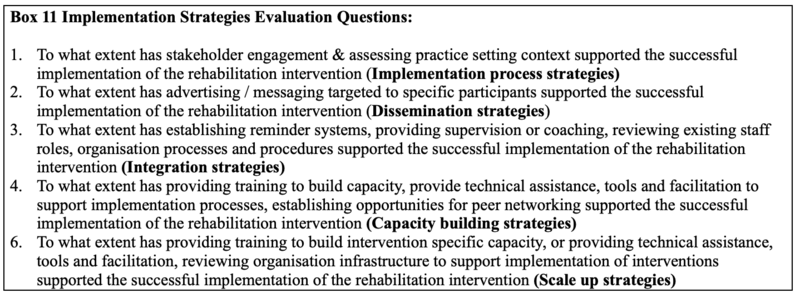

</blockquote>It is easy to become overwhelmed given all these implementation strategies. It is therefore beneficial to use a more comprehensive approach to evaluating have influence on a particular implementation strategy's outcome. '''Box 11''' provides several key implementation strategy evaluation questions. | |||

[[File:Implementation science box 11.png|center|thumb|800x800px|Key implementation strategy evaluation questions]] | |||

== Evaluate Implementation Outcomes == | |||

It is important to acknowledge that an unresolved issue in the field of implementation science is how to evaluate implementation effectiveness of evidence-based interventions. Distinguishing implementation effectiveness from intervention effectiveness is critical for transporting interventions from laboratory settings to real-world and or community settings. When such efforts failit is important to know if the failure occurred because the intervention was ineffective in the new setting (intervention failure), or if a good intervention was implemented incorrectly (implementation failure). <blockquote>'''Implementation Outcomes'''- refer to the effects of deliberate implementation strategies to adopt and embed new interventions, programs or practices into real world rehabilitation settings. </blockquote>Three clusters of implementation outcomes have been suggested: <ref name=":0">Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. [https://link.springer.com/article/10.1007/s10488-010-0319-7 Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda.] Administration and policy in mental health and mental health services research. 2011 Mar;38(2):65-76.</ref> | |||

# Implementation outcomes - the effects of implementation strategies undertaken to implement a new intervention such as: acceptability, adoption, appropriateness, uptake, feasibility, fidelity, implementation cost, penetration, and sustainability of the evidence-based rehabilitation interventions | |||

# Service system outcomes - the effects of interventions on service outcomes such as: efficiency, safety, effectiveness, equity, patient centredness, timeliness of the evidence-based rehabilitation interventions | |||

# Patient Outcomes -the effects of intervention on patient outcomes such as: changes in patient satisfaction function or symptomology as a result of the evidence-based rehabilitation interventions | |||

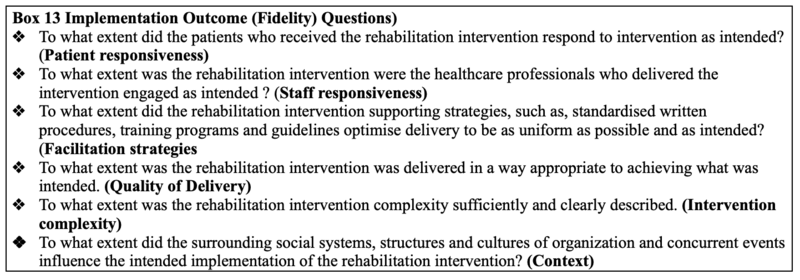

Measuring implementation outcomes in addition to client or service system outcomes is crucial for distinguishing effective or ineffective programs that are well or poorly implemented. While all three clusters of implementation outcomes are key to focus on – this article will mainly focus on implementation outcomes. '''Box 12''' provides definitions of implementation outcome dimensions adapted from Proctor et al. (2011) | |||

[[File:Implementation science box 12.png|center|thumb|800x800px|Definitions of implementation outcome dimensions<ref name=":0" />]] | |||

Given that there are eight implementation outcomes, once again it can be overwhelming as to how and what outcome dimension to select. There are several factors to consider when choosing which implementation outcomes to evaluate: | |||

Given that there are | |||

* the specific barriers to implementation you have observed | * the specific barriers to implementation you have observed | ||

| Line 64: | Line 61: | ||

The stage of implementation and your unit of analysis can also influence. For example, acceptability may be more appropriate to study during early implementation and sustainability may be more appropriately measured later in the implementation process. | The stage of implementation and your unit of analysis can also influence. For example, acceptability may be more appropriate to study during early implementation and sustainability may be more appropriately measured later in the implementation process. | ||

== Implementation Fidelity == | |||

Fidelity translates as “faithfulness”; thus, fidelity of intervention means faithful and correct implementation of the key components of a defined intervention. Unless such an evaluation is made, it cannot be determined whether a lack of impact is due to poor implementation or inadequacies inherent in the intervention in the real-world setting. Evidence-based practice also assumes that an intervention is being implemented in full accordance with its published details. This is particularly important given the greater potential for inconsistencies in implementation of an intervention in real world rather than experimental conditions. Evidence-based practice needs a means of evaluating whether the intervention is actually being implemented as the designers intended. <blockquote>Implementation fidelity can be described in terms of three key elements that need to be measured including: | |||

# Adherence to an intervention - whether an intervention is being delivered as it was designed or written as far as content of the intervention; the exposure or dose of an intervention received by participants | |||

# Intervention complexity - complex interventions have greater scope for variation in their delivery, therefore are more vulnerable to one or more components not being correctly implemented | |||

# Facilitation strategies - the provision of manuals, guidelines, training, monitoring and feedback, capacity building, and incentives: | |||

#* Quality of delivery refers to the manner in which a practitioner or administrator or volunteer delivers an intervention | |||

#* Participant responsiveness measures how far participants respond to, or are engaged by, an intervention | |||

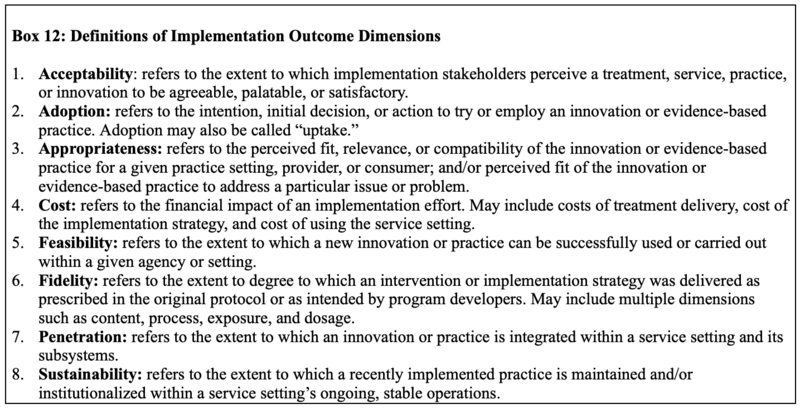

</blockquote>It is easy to become overwhelmed given the large number of elements that may influence implementation fidelity. It is therefore beneficial to use a more comprehensive approach to evaluating the influence on the implementation fidelity outcomes. '''Box 13''' provides several key implementation outcome (fidelity) evaluation questions. | |||

[[File:Implementation science box 13.png|center|thumb|800x800px|Key implementation outcome (fidelity) evaluation questions]] | |||

== | == Summary == | ||

Given the multiple challenges to implementing rehabilitation interventions it is important to think about and assess both the implementation process and the outcomes of your implementation process. To inform future implementation facilitation efforts, it is important to know not just ''what'' worked but ''how'' and ''why'' the selected implementation strategies performed. | |||

It is important to distinguish implementation effectiveness from intervention effectiveness. Given that there are multiple implementation effectiveness outcomes be select about implementation outcomes to assess. | |||

== Resources == | |||

'''Recommended Reading:''' | |||

*Allen CG, Barbero C, Shantharam S, Moeti R. Is theory guiding our work? A scoping review on the use of implementation theories, frameworks, and models to bring community health workers into health care settings.Journal of public health management and practice: JPHMP. 2019 Nov;25(6):571. | |||

*Khadjesari Z, Boufkhed S, Vitoratou S, Schatte L, Ziemann A, Daskalopoulou C, Uglik-Marucha E, Sevdalis N, Hull L. Implementation outcome instruments for use in physical healthcare settings: a systematic review. Implementation Science. 2020 Dec;15(1):1-6. | |||

'''Additional Implementation Outcome Measurement Tools:''' | |||

Given the complexity of thinking and measuring implementation outcomes, for more detailed information about instruments to measure implementation outcomes please review: | |||

* Washington University in St. Louis, Institute of Clinical and Translational Sciences: [https://cpb-us-w2.wpmucdn.com/sites.wustl.edu/dist/6/786/files/2017/08/DIRC-implementation-outcomes-tool-dg_7-27-17_ab-27xbrka.pdf Implementation Outcomes Toolkit] | |||

* [https://implementationoutcomerepository.org Implementation Outcome Repository] <ref>Khadjesari Z, Boufkhed S, Vitoratou S, Schatte L, Ziemann A, Daskalopoulou C, Uglik-Marucha E, Sevdalis N, Hull L. [https://pubmed.ncbi.nlm.nih.gov/32811517/ Implementation outcome instruments for use in physical healthcare settings: a systematic review.] Implementation Science. 2020 Dec;15(1):1-6.</ref> | |||

* Center for Technology and Behavioral Health: [https://www.c4tbh.org/resources/measures-for-implementation-studies/ Measures for Implementation Research] <ref name=":0" /> | |||

== References == | == References == | ||

<references /> | <references /> | ||

Revision as of 05:14, 12 May 2022

Top Contributors - Stacy Schiurring, Tarina van der Stockt, Kim Jackson and Jess Bell

An Implementation Evaluation Mindset

The term evaluation has many meanings and myths associated with it, leading to confusion, fear and resistance to its benefits.

Evaluation involves all of the following:

- gathering reliable and valid information in a systematic way from all intervention stakeholders

- attributing value to the implementation process and strategies, or to the outcomes of that process

- informing future rehabilitation intervention decision-making

The backbone of any implementation evaluation is a clear evaluation purpose, together with direct, relevant, and answerable evaluation questions that are aligned with the evaluation approaches and methods. Evaluations are typically associated with judging the effectiveness of the implementation process, but they can also inform decisions about that process and its outcomes.

Implementation Evaluation and Contextual Challenges

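“What will affect what you implement?” To refresh your memory, please see the Understanding Context section of the Implementation Science: Pre-Implementation Stage article (https://www.physio-pedia.com/Implementation_Science:_Pre-Implementation_Stage#Understanding_Context) to review the multiple factors that may affect the successful implementation of evidence-based rehabilitation interventions.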

It is easy to become overwhelmed by the growing quantity of information about the many contextual challenges to implementing rehabilitation interventions. It is therefore beneficial to take a more comprehensive approach to evaluating which contextual factors influence a particular implementation's outcome. Box 10 provides several key implementation process evaluation questions.

Examples of implementation science evaluation frameworks that can identify barriers and facilitators to key implementation outcomes:

- Consolidated Framework for Implementation Research (CFIR)

- Theoretical Domains Framework (TDF)[1]

- Integrated Promoting Action on Research Implementation in Health Services (i-PARIHS)[2][3]

- Exploration, Preparation, Implementation and Sustainment (EPIS)

Many other evaluation frameworks are consistently used to evaluate the effectiveness of evidence-informed rehabilitation interventions. These include:

- The Reach, Effectiveness, Adoption, Implementation, Maintenance (RE-AIM) framework[4][5]: used to evaluate the success of implementation and impact of translating research to “real-world” conditions

- The Precede-Proceed Model of Health Program Planning & Evaluation [6]

Implementation Evaluation and Implementation Strategies

“What will help what you implement?” To refresh your memory, please see the Implementation Strategy Classification section of the Implementation Science: Implementation Stage article to review the multiple implementation strategies that support the successful implementation of evidence-based rehabilitation interventions.

The five classes of implementation strategies:

- Implementation process strategies

- Dissemination strategies

- Integration strategies

- Capacity building strategies

- Scale-up strategies

It is easy to become overwhelmed by all these implementation strategies. It is therefore beneficial to take a more comprehensive approach to evaluating which factors influence a particular implementation strategy's outcome. Box 11 provides several key implementation strategy evaluation questions.

Evaluate Implementation Outcomes

It is important to acknowledge that an unresolved issue in the field of implementation science is how to evaluate the implementation effectiveness of evidence-based interventions. Distinguishing implementation effectiveness from intervention effectiveness is critical for transporting interventions from laboratory settings to real-world or community settings. When such efforts fail, it is important to know whether the failure occurred because the intervention was ineffective in the new setting (intervention failure) or because a good intervention was implemented incorrectly (implementation failure).

Implementation outcomes refer to the effects of deliberate implementation strategies to adopt and embed new interventions, programs or practices into real-world rehabilitation settings.

Three clusters of outcomes have been suggested:[7]

- Implementation outcomes - the effects of implementation strategies undertaken to implement a new intervention, such as: acceptability, adoption (uptake), appropriateness, feasibility, fidelity, implementation cost, penetration, and sustainability of the evidence-based rehabilitation interventions

- Service system outcomes - the effects of interventions on service outcomes, such as: efficiency, safety, effectiveness, equity, patient-centredness, and timeliness of the evidence-based rehabilitation interventions

- Patient outcomes - the effects of interventions on patient outcomes, such as: changes in patient satisfaction, function, or symptomatology as a result of the evidence-based rehabilitation interventions

Measuring implementation outcomes in addition to client or service system outcomes is crucial for distinguishing effective from ineffective programs, and well-implemented from poorly implemented ones. While all three clusters of outcomes are important, this article focuses mainly on implementation outcomes. Box 12 provides definitions of implementation outcome dimensions adapted from Proctor et al. (2011).

Given that there are eight implementation outcomes, it can once again be overwhelming to decide which outcome dimensions to select. There are several factors to consider when choosing which implementation outcomes to evaluate:

- the specific barriers to implementation you have observed

- the novelty of the evidence-based practice you are trying to implement

- the setting in which implementation is taking place

- the resources for and quality of usual training for implementation

The stage of implementation and your unit of analysis can also influence which outcomes you choose to evaluate. For example, acceptability may be more appropriate to study during early implementation, while sustainability may be more appropriately measured later in the implementation process.

Implementation Fidelity

Fidelity translates as “faithfulness”; thus, fidelity of an intervention means faithful and correct implementation of the key components of a defined intervention. Unless fidelity is evaluated, it cannot be determined whether a lack of impact is due to poor implementation or to inadequacies inherent in the intervention in the real-world setting. Evidence-based practice also assumes that an intervention is being implemented in full accordance with its published details. This is particularly important given the greater potential for inconsistencies when an intervention is implemented in real-world rather than experimental conditions. Evidence-based practice therefore needs a means of evaluating whether the intervention is actually being implemented as its designers intended.

Implementation fidelity can be described in terms of three key elements that need to be measured:

- Adherence to an intervention - whether the content of an intervention is being delivered as it was designed or written, and the exposure or dose of the intervention received by participants

- Intervention complexity - complex interventions have greater scope for variation in their delivery and are therefore more vulnerable to one or more components not being correctly implemented

- Facilitation strategies - the provision of manuals, guidelines, training, monitoring and feedback, capacity building, and incentives:

  - Quality of delivery refers to the manner in which a practitioner, administrator, or volunteer delivers an intervention

  - Participant responsiveness measures how far participants respond to, or are engaged by, an intervention

It is easy to become overwhelmed by the large number of elements that may influence implementation fidelity. It is therefore beneficial to take a more comprehensive approach to evaluating what influences implementation fidelity outcomes. Box 13 provides several key implementation outcome (fidelity) evaluation questions.

Summary

Given the multiple challenges to implementing rehabilitation interventions, it is important to think about and assess both the implementation process and the outcomes of that process. To inform future implementation facilitation efforts, it is important to know not just what worked but how and why the selected implementation strategies performed as they did.

It is important to distinguish implementation effectiveness from intervention effectiveness. Given that there are multiple implementation outcomes, be selective about which ones to assess.

Resources

Recommended Reading:

- Allen CG, Barbero C, Shantharam S, Moeti R. Is theory guiding our work? A scoping review on the use of implementation theories, frameworks, and models to bring community health workers into health care settings. Journal of public health management and practice: JPHMP. 2019 Nov;25(6):571.

- Khadjesari Z, Boufkhed S, Vitoratou S, Schatte L, Ziemann A, Daskalopoulou C, Uglik-Marucha E, Sevdalis N, Hull L. Implementation outcome instruments for use in physical healthcare settings: a systematic review. Implementation Science. 2020 Dec;15(1):1-6.

Additional Implementation Outcome Measurement Tools:

Given the complexity of thinking about and measuring implementation outcomes, please review the following for more detailed information about measurement instruments:

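- Washington University in St. Louis, Institute of Clinical and Translational Sciences: Implementation Outcomes Toolkit (https://cpb-us-w2.wpmucdn.com/sites.wustl.edu/dist/6/786/files/2017/08/DIRC-implementation-outcomes-tool-dg_7-27-17_ab-27xbrka.pdf)

- Implementation Outcome Repository (https://implementationoutcomerepository.org)[8]

- Center for Technology and Behavioral Health: Measures for Implementation Research (https://www.c4tbh.org/resources/measures-for-implementation-studies/)[7]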

References

- [1] Atkins L, Francis J, Islam R, O’Connor D, Patey A, Ivers N, Foy R, Duncan EM, Colquhoun H, Grimshaw JM, Lawton R. A guide to using the Theoretical Domains Framework of behaviour change to investigate implementation problems. Implementation Science. 2017 Dec;12(1):1-8.

- [2] Hunter SC, Kim B, Mudge A, Hall L, Young A, McRae P, Kitson AL. Experiences of using the i-PARIHS framework: a co-designed case study of four multi-site implementation projects. BMC health services research. 2020 Dec;20(1):1-4.

- [3] Roberts NA, Janda M, Stover AM, Alexander KE, Wyld D, Mudge A. The utility of the implementation science framework “Integrated Promoting Action on Research Implementation in Health Services” (i-PARIHS) and the facilitator role for introducing patient-reported outcome measures (PROMs) in a medical oncology outpatient department. Quality of Life Research. 2021 Nov;30(11):3063-71.

- [4] Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. American journal of public health. 1999 Sep;89(9):1322-7.

- [5] Bondarenko J, Babic C, Burge AT, Holland AE. Home-based pulmonary rehabilitation: an implementation study using the RE-AIM framework. ERJ open research. 2021 Apr 1;7(2).

- [6] Allen CG, Barbero C, Shantharam S, Moeti R. Is theory guiding our work? A scoping review on the use of implementation theories, frameworks, and models to bring community health workers into health care settings. Journal of public health management and practice: JPHMP. 2019 Nov;25(6):571.

- [7] Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Administration and policy in mental health and mental health services research. 2011 Mar;38(2):65-76.

- [8] Khadjesari Z, Boufkhed S, Vitoratou S, Schatte L, Ziemann A, Daskalopoulou C, Uglik-Marucha E, Sevdalis N, Hull L. Implementation outcome instruments for use in physical healthcare settings: a systematic review. Implementation Science. 2020 Dec;15(1):1-6.